Over the course of several days in October we detected and disrupted a highly automated cyber operation that had attempted to turn our AI software development platform into one node within a worldwide mesh of "off‑label" LLM usage. The attackers used AI coding agents to generate and maintain their infrastructure, to adapt to our defenses in real time, and to orchestrate traffic from tens of thousands of synthetic organizations.

The goal of this attack was to exploit free compute at scale: to chain together free usage from multiple AI products, resell that access, and use it to mask a broad range of activity, including cyber‑crime. This attack coincided with similar incidents across the industry, including those recently disclosed by Anthropic. The methods and level of automation we observed have direct implications for how state‑linked and organized cybercriminals will operate in the future.

We are sharing what we saw, how we responded, and what we learned, in the hope that it helps others harden their own systems.

Detecting the initial attack

The first sign of trouble was not an alert from a security scanner; it was a simple observation from Stepan, one of our members of technical staff.

On the evening of October 11, Stepan pinged our internal channel about unusual patterns in our model inference costs. At the same time, we were seeing large numbers of new organizations onboard and use Droid in what looked like perfectly normal ways. From a distance, this behavior could have indicated a broader audience increasing the breadth and volume of its usage.

The closer we looked, the less that story held together.

When we pulled raw inference logs and compared them to product analytics, we saw that more than twenty thousand of the organizations created over a three‑day window were sending model traffic that never showed the usual fingerprints of the Droid client. Their requests mimicked legitimate traffic: they hit the same endpoints and used the same APIs, but lacked the internal signals that most genuine users produce as they navigate the interface. Those signals were not designed as a fraud marker; they were simply a side effect of how the client talks to the back end. In this case, they told us that a large cohort of "users" was interacting with Droid in a fundamentally different way.
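The comparison described above can be sketched as a set difference: organizations that appear in inference logs minus organizations that produced any client‑side signal. This is only an illustrative sketch. The record shapes, the `org_id` and `event` field names, and the `min_requests` threshold are hypothetical and are not taken from the actual logs or analytics schema.

```python
from collections import Counter
from typing import Iterable, Mapping


def orgs_missing_client_signals(
    inference_logs: Iterable[Mapping[str, str]],
    client_events: Iterable[Mapping[str, str]],
    min_requests: int = 5,
) -> set[str]:
    """Return org IDs that send model traffic but never emit client signals.

    All field names are hypothetical placeholders.
    """
    # Count inference requests per organization.
    request_counts = Counter(row["org_id"] for row in inference_logs)
    # Organizations that produced at least one client-side signal.
    orgs_with_signals = {row["org_id"] for row in client_events}
    return {
        org
        for org, count in request_counts.items()
        if count >= min_requests and org not in orgs_with_signals
    }


inference_logs = (
    [{"org_id": "org_a"}] * 6
    + [{"org_id": "org_b"}] * 8
    + [{"org_id": "org_c"}] * 2
)
client_events = [{"org_id": "org_a", "event": "session_start"}]

suspicious = orgs_missing_client_signals(inference_logs, client_events)
print(suspicious)  # {'org_b'}
```

The `min_requests` floor keeps brand‑new organizations with only a couple of requests out of the result, since they may simply not have generated any client signal yet.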

At that point we knew this was not just enthusiasm from real customers. What we did not yet understand was who was behind it, how they were operating, or how quickly they could adapt.

Who was attacking us?

A large share of the abusive volume was originating from data centers and consumer ISPs associated with mainland China, Russia, and Southeast Asia. Many of these networks overlapped with ones that have been mentioned repeatedly in public reporting on China‑based cyber operations.

Based on the coordination and scale of the activity, the infrastructure we observed, the overlap with known malicious networks, and the degree of automation in the tools, we assess with high confidence that the attack was conducted by a large, China‑based operation. We also have specific indicators that at least one state‑linked actor was involved. We have shared technical indicators, timelines, and our assessment with the relevant security and regulatory bodies.

Ultimately, though, the most important characteristic of the threat was not its origin but its method: this was an AI‑orchestrated operation, built and maintained at a speed and level of sophistication that are hard to achieve with human labor alone.

The capabilities the attackers leaned on

What made this campaign unusual was the kind of work the models were doing on the attackers' behalf. Modern coding agents can absorb long, messy descriptions, reason over large codebases and logs, and synthesize working infrastructure from that context. They can be kept in the loop as systems run: read error traces, propose changes, and revise code over and over. And because they are typically wired into real tools (source control, deployment pipelines, log stores, HTTP clients), their output can move from prompt to production very quickly.

The group behind this fraud operation leaned into those strengths. From our analysis of public repositories, commit histories, and internal logs, we saw AI systems used to generate proxy servers and key‑rotation logic, to author OAuth flows and automated signup scripts, to inspect error logs and suggest patches when our defenses blocked them, and to obfuscate traffic in ways that were nearly invisible to human reviewers.

In practice, they were treating coding agents as programmable junior engineers: highly capable, inexpensive, and tireless.

How the fraud operation worked

The attackers' first step was to map our billing and onboarding flows.

They identified that we provided a free trial of tokens for new organizations; that trials could be created via a self‑serve path with human‑security checks, SMS verification, and rate limiting; and that specific signatures used by the Droid client could be reproduced without the original Droid code.

They then built an automation framework that treated Droid - and at least two other major coding agents - as interchangeable backends behind their own proxy layer. From their perspective, the "product" was not an interface or collaboration experience. It was raw access to model inference at a deeply discounted price.

The core of their system was a network of HTTP proxies and control servers spread across cloud providers and VPN networks worldwide. We know, from the code and commit metadata we recovered, that those proxies were largely written by AI. In several public repositories we found scripts and configuration files whose structure and comments matched the output of modern coding agents, commits with messages explicitly co‑attributing changes to automated assistants, and rapid iteration cycles (e.g. major features appearing in hours, not days) that are consistent with agentic development.

The infrastructure supported automated creation of accounts and organizations across multiple providers, redemption of trials and promotions as soon as they became available, health checking and key rotation when a provider banned or throttled a key, and routing logic that could shift traffic away from Droid moment‑to‑moment as our defenses tightened. In effect, they had built a meta‑client: a system that sat on top of several AI platforms, abstracted away their differences, and treated them as a combined pool of tokens to be harvested.

We began tightening checks as soon as we understood the scale of abuse. But because the attackers had embedded AI deeply into their own stack, they could adapt at an unusually high tempo.

We watched, over a matter of days, as they mimicked legitimate traffic by shaping their proxy requests to match our expected headers, payloads, and timings; rotated through aggressive VPN and residential proxy networks to change IPs frequently, often retiring an address after only a handful of requests; chained together referral flows, cancellation paths, and promotion logic in ways that our original design had not anticipated; and implemented tricks like inserting zero‑width spaces and other non‑printing characters into fields that our systems treated as identifiers. To a human scanning logs, "create_organization" and a copy with zero‑width spaces (U+200B) inserted after "crea" and "organi" look identical; to a naive matching algorithm, they are different strings.
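One common mitigation for the zero‑width character trick is to canonicalize identifiers before comparing them. The sketch below is a minimal example using Python's standard `unicodedata` module. The function names are hypothetical, and it is not a description of the matching logic actually deployed.

```python
import unicodedata


def canonical_identifier(value: str) -> str:
    """Normalize an identifier before comparing or matching it."""
    # NFKC folds compatibility forms (e.g. fullwidth letters) to plain ones.
    normalized = unicodedata.normalize("NFKC", value)
    # Drop format (Cf) and control (Cc) characters, which covers
    # zero-width space/joiner/non-joiner, the BOM, and the soft hyphen.
    return "".join(
        ch for ch in normalized if unicodedata.category(ch) not in {"Cf", "Cc"}
    )


def has_hidden_characters(value: str) -> bool:
    """True if canonicalization changed the string, which is itself a signal."""
    return canonical_identifier(value) != value


clean = "create_organization"
obfuscated = "crea\u200bte_organi\u200bzation"

print(clean == obfuscated)                        # False
print(canonical_identifier(obfuscated) == clean)  # True
print(has_hidden_characters(obfuscated))          # True
```

Flagging the presence of hidden characters, rather than silently stripping them, can be useful: legitimate clients rarely have a reason to put them in an identifier field.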

The speed of these changes suggested that a human operator was not sitting down to write each patch by hand. Instead, logs and error messages were being fed into coding agents, which then proposed code changes that could be deployed directly.

By infiltrating some of the Telegram channels used to coordinate the activity, we saw how the infrastructure was being used. Participants openly advertised free or heavily discounted access to premium coding assistants, bundled keys and endpoints that allowed downstream customers to call the same back‑ends without creating their own accounts, and tools and tutorials for integrating those proxies into bots and automated workflows.

The use cases discussed ranged widely. Many were benign but economically parasitic: large‑scale role‑playing, content generation, experimentation. Others clearly pushed into cyber‑crime: credential stuffing, vulnerability research against third‑party targets, and attempts to mask the source of distributed attacks by funneling them through these stolen trials.

In all of these scenarios, the attackers' incentives were the same: convert inference from well-known coding providers into a fungible commodity, resell or use that commodity themselves, and use the resulting mesh of accounts, IPs, and keys to hide the origin of future operations. From their own documentation, we know that the same framework was configured to target multiple frontier coding agent companies in parallel. Error messages, configuration flags, and channel discussions make us highly confident that at least two other large platforms experienced similar abuse during this period.

Using Droid to defend Droid

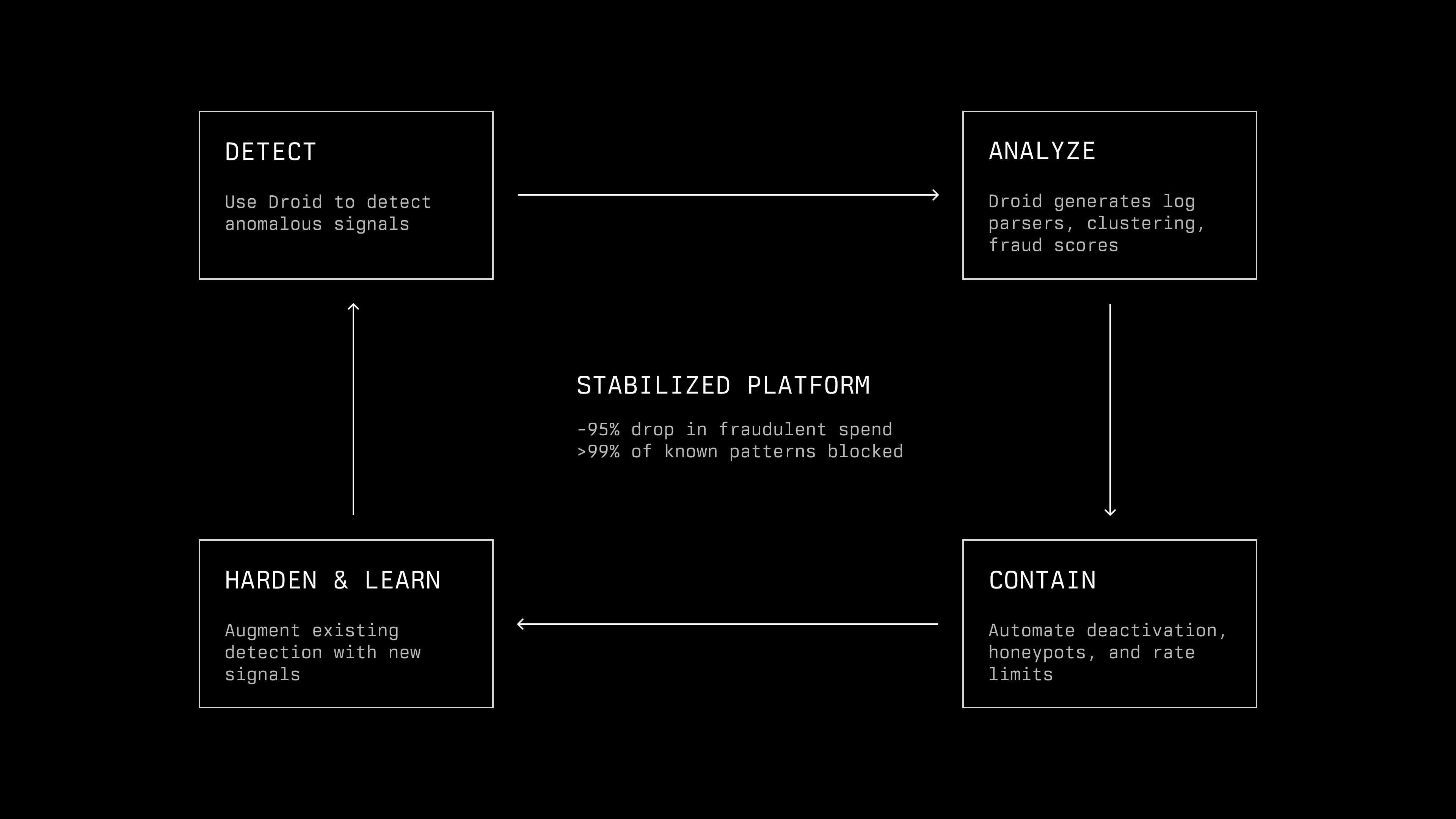

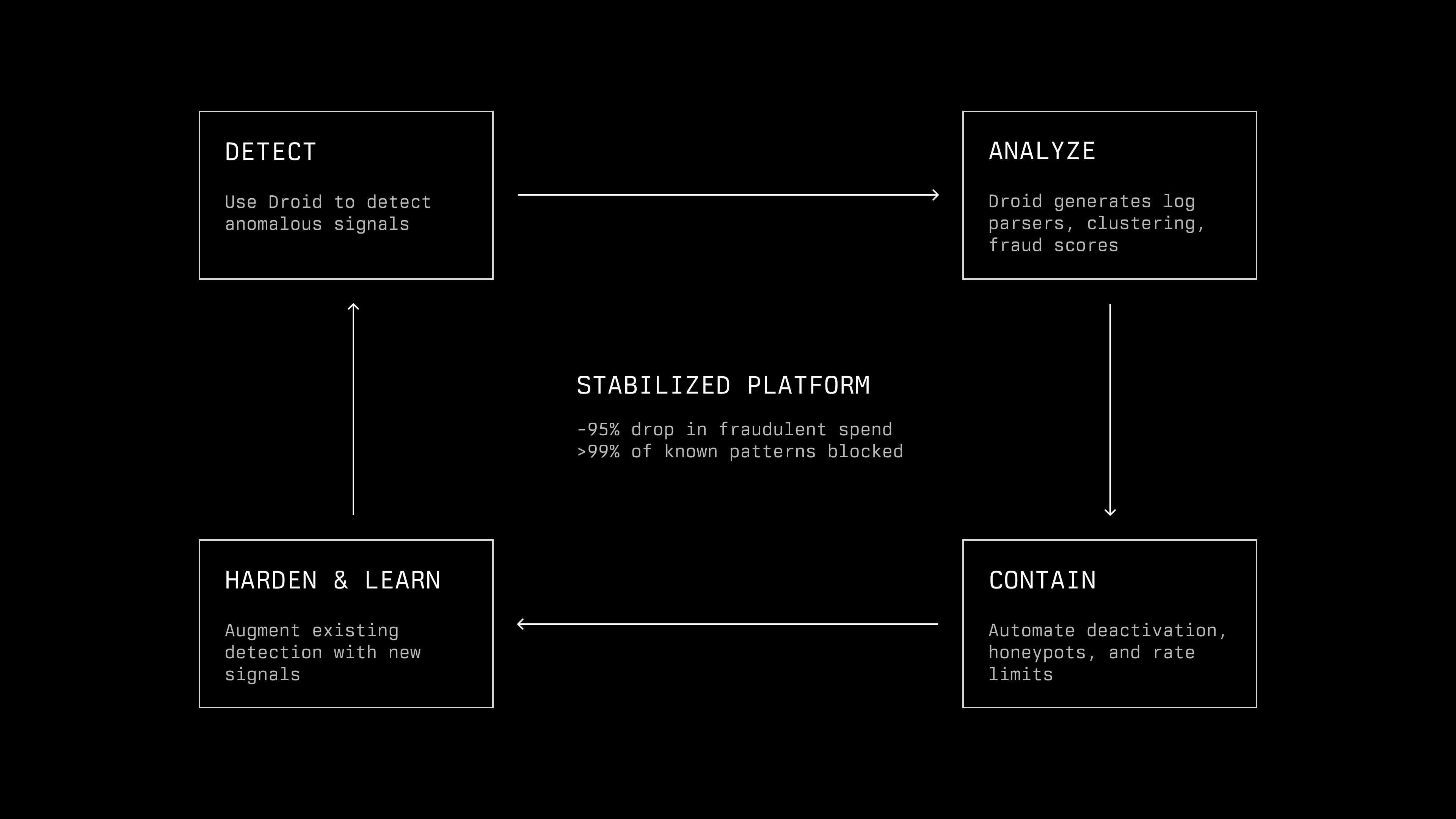

Manually addressing these attacks was not feasible at the volume we were seeing. To keep pace with an AI‑augmented attacker, we needed AI‑augmented defense. We turned to the same system the attackers had been repurposing: Droid.

Stepan and David connected Droid to real-time log monitoring systems and tasked it with observing and identifying fraudulent access patterns. Droid built a group of fraud classifiers designed to respond to new fraud patterns as they emerged in real time. Then Droid was used to create an automated enforcement mechanism that could run these classifiers and immediately block fraudulent accounts upon detection. The system was designed to evolve continuously: when new fraud patterns emerged, Droid would assess logs and add corresponding classifiers to the detection arsenal. Remarkably, the entire fraud-protection system built by Droid went live within hours, achieving near-zero false positives while ensuring legitimate users remained unaffected.
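The shape of such a system (a growing set of classifiers plus an enforcement step that blocks on any match) might look something like the sketch below. Everything here is an assumption made for illustration: the `FraudEnforcer` class, the feature names, and the two example rules are hypothetical, and the real classifiers are not described in enough detail to reproduce.

```python
from dataclasses import dataclass, field
from typing import Callable

# A classifier inspects per-organization features and returns True
# when the organization looks fraudulent. Feature names are hypothetical.
Classifier = Callable[[dict], bool]


@dataclass
class FraudEnforcer:
    classifiers: dict[str, Classifier] = field(default_factory=dict)
    blocked: dict[str, list[str]] = field(default_factory=dict)

    def register(self, name: str, classifier: Classifier) -> None:
        """Add a classifier at runtime as new patterns are identified."""
        self.classifiers[name] = classifier

    def evaluate(self, org_id: str, features: dict) -> list[str]:
        """Run every classifier; block the org if any of them match."""
        matches = [name for name, clf in self.classifiers.items() if clf(features)]
        if matches:
            # Record which classifiers fired so a human can review appeals.
            self.blocked[org_id] = matches
        return matches


enforcer = FraudEnforcer()
enforcer.register(
    "no_client_signals",
    lambda f: f.get("requests", 0) >= 5 and f.get("client_events", 0) == 0,
)
enforcer.register(
    "ip_churn",
    lambda f: f.get("distinct_ips", 0) >= 10
    and f.get("requests", 0) / f["distinct_ips"] < 3,
)

enforcer.evaluate("org_x", {"requests": 40, "client_events": 0, "distinct_ips": 20})
enforcer.evaluate("org_y", {"requests": 40, "client_events": 12, "distinct_ips": 2})
print(enforcer.blocked)  # {'org_x': ['no_client_signals', 'ip_churn']}
```

Keeping the names of the classifiers that fired alongside each block makes it easier to reverse a mistaken block and to retire a rule that turns out to be too aggressive.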

We ran this system live to disable abusive organizations, but left a small set of fraudulent accounts active as honeypots, watching how their behavior shifted as we closed specific loopholes. Those accounts confirmed that, as we increased friction on Droid, traffic was relocating to other providers rather than simply disappearing.
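One way to keep a small, stable honeypot set is to assign flagged organizations to a holdout deterministically, so the same accounts stay under observation across enforcement runs. The sketch below assumes a hash‑based assignment. The `is_honeypot` function, the 1% fraction, and the salt are hypothetical choices, not details of how the honeypots were actually selected.

```python
import hashlib


def is_honeypot(
    org_id: str, holdout_fraction: float = 0.01, salt: str = "holdout-v1"
) -> bool:
    """Deterministically assign a flagged org to the monitored holdout."""
    digest = hashlib.sha256(f"{salt}:{org_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < holdout_fraction


flagged = [f"org_{i}" for i in range(10_000)]
honeypots = [org for org in flagged if is_honeypot(org)]
to_disable = [org for org in flagged if not is_honeypot(org)]

# Every flagged org lands in exactly one of the two groups.
print(len(honeypots) + len(to_disable) == len(flagged))  # True
```

Because the assignment depends only on the organization ID and the salt, rerunning enforcement does not reshuffle which accounts are being watched.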

Throughout, our support and community staff worked closely with engineering to ensure that legitimate users who were mistakenly flagged could have access restored quickly, and to explain why new verification steps were appearing.

Outcomes and what we learned

Our internal metrics showed that fraudulent LLM consumption had fallen by roughly 95% and that the vast majority of abuse techniques were being blocked before they could incur meaningful cost. More importantly, we had moved our defensive posture into an AI‑native regime. We built systems that could ingest and reason over large volumes of logs quickly, and automated workflows that could contain abuse as soon as it was detected.

This episode has strengthened our conviction on three points.

First, AI is now operational in cyber‑crime. The attackers we faced were not relying on human engineers to write every line of code. They were using coding agents, including ours, as high‑leverage operators.

Second, the platforms that accelerate development will be targeted. Any system that offers powerful models, free access, and flexible integration surfaces will be viewed as an attractive substrate for both organized crime and state‑linked groups.

Third, defense must be AI‑assisted as well. Trying to counter an AI‑enabled adversary with purely manual processes is not realistic. In this incident, Droid itself was essential for investigation and mitigation.

This campaign is not unique. Based on what we saw in configuration files, forums, and behavior, there is substantial evidence that at least two other frontier coding agents experienced similar abuse during this period, and that the same families of tools are being pointed at other services today.

We believe transparency strengthens the ecosystem. The techniques used here (proxy abstraction, AI‑generated infrastructure, invisible obfuscation, and rapid evasion) are likely to recur. Many organizations building with Droid are also operating their own APIs and trial systems. Understanding how our systems were abused may help them avoid similar pitfalls. As attackers increasingly use AI agents to accelerate and scale their cyber-crime operations, organizations must adopt AI-powered defense systems to maintain parity. The speed differential demonstrated here (hours versus weeks) illustrates that traditional security approaches can no longer keep pace with AI-enhanced threats. The only realistic defense against AI-accelerated attacks is to deploy equally sophisticated AI defenders.